migsi 2 application (2017-19)

MIGSI Application History

The MIGSI 2 Application was developed primarily to act as a general-purpose toolkit for making music with MIGSI, a Minimally Invasive Gesture Sensing Interface for trumpet, co-developed by myself and performer-composer Sarah Belle Reid. It includes tools for synthesis, audio processing, spatialization, and—importantly—for creating complex control structures based on input from the sensors on the MIGSI hardware. To date, the MIGSI 2 Application is the most extensive single general-purpose synthesis toolkit I have built.

When first completed in 2015, the MIGSI hardware was paired with some fairly bare-bones software tools: one Max/MSP patch which directly translated all of its sensor data into OSC messages for use in other software, and another slightly more fully-featured patch which allowed for some amount of data scaling/conditioning prior to OSC formatting (the MIGSI 1 Application). For the first couple of years we used MIGSI, performances were based around unique software patches/pieces—the OSC interfacing software would run in the background and send data to another local program, typically built in Max or Pure Data, which would perform the bulk of the synthesis/audio-related tasks for a given piece.

The process of building new patches for every piece was tiresome, but important at first—we needed a way to learn what we wanted to do with MIGSI, as it was a completely new performance paradigm for both of us. We gradually learned some basic, generally useful ways of mapping MIGSI’s sensor data. Concurrently, I was experimenting with various chaotic synthesis techniques and ideas for dealing with modularity in Max/MSP patches (in patches like TCO, DTCO, MCI, Virtual 200, etc.). Eventually, we had the idea to combine our growing insights about MIGSI’s potential uses with some of my more original chaotic synthesis structures using a relatively modular software structure with global state storage/recall—in order to simplify the processes of iteration, experimentation, and the creation of new pieces of music.

The application was gradually expanded/modified from some point in 2017 to some point in 2019 or so—across which time period our ideas about how to use MIGSI developed considerably. It wouldn’t be possible, timely, or terribly interesting to go into depth here about each of the dozen or more incremental iterations of the MIGSI 2 Application, so what follows is an overview of the program’s final state.

MIGSI 2 Application Concepts + Overview

Conceptually, the MIGSI 2 Application features a semi-modular structure. As in Buchla’s modular instruments, signals are separated into multiple types: audio signals, control signals, and timing pulse signals. Control signals are further separated into two types: data from the MIGSI hardware, and data generated within the software itself. Generally speaking, the audio routing is fixed, using mixing stages at the input of each processor that allow signals from any or all of the program’s sound sources to be processed through any processor. Control and pulse signals are treated in a more modular fashion, using drop-down menus to select control/pulse sources at each control/pulse destination. Control signal menus are blue; pulse signal menus are red. Yellow menus represent data collected from the MIGSI hardware itself, or conditioned alterations of this data (more on this shortly).

Instrument Definition storage & recall system

All parameter settings and routing settings are stored as part of Instrument Definitions, which, as in TCO and other applications, are organized into .JSON files of ten Instrument Definitions each. Typically, a “piece” that uses the MIGSI 2 Application is organized into ten or fewer instrument definitions, which may be arbitrarily selected by the performer at any given time using MIDI or QWERTY keyboard-based commands. Alternatively, an instrument definition may itself include rules/conditions under which it may programmatically switch to another instrument definition within the current bank, with any amount of predictability or unpredictability equally possible.

When you open the MIGSI 2 Application, you’re presented with the “main” window, where all audio generation and processing takes place. This screen is divided into various submodules, namely External Input, Granular Processor, Temporal Reflection Interval Processor, two Temporal Conduit Oscillators, a Polyphonic Granular Facility, a master signal mixer/spatial effect processor, master level controls/recording controls, an Instrument Definition management section, a Bus Access menu structure, and a section dedicated to navigation to all other windows/views (M Edit, Monitor, Control Editor, Pulse Editor, CV Editor, OSC Editor, Aux Editor, and Quad Editor). Let’s take a look at these windows, starting with M Edit, the window dedicated to interfacing with the MIGSI hardware.

MIGSI Hardware Interfacing

M Edit is an abbreviated form of MIGSI Edit—this is the window in which MIGSI is calibrated and all of its sensor data is scaled/sloped/conditioned into forms we found generally useful for different parts of the application.

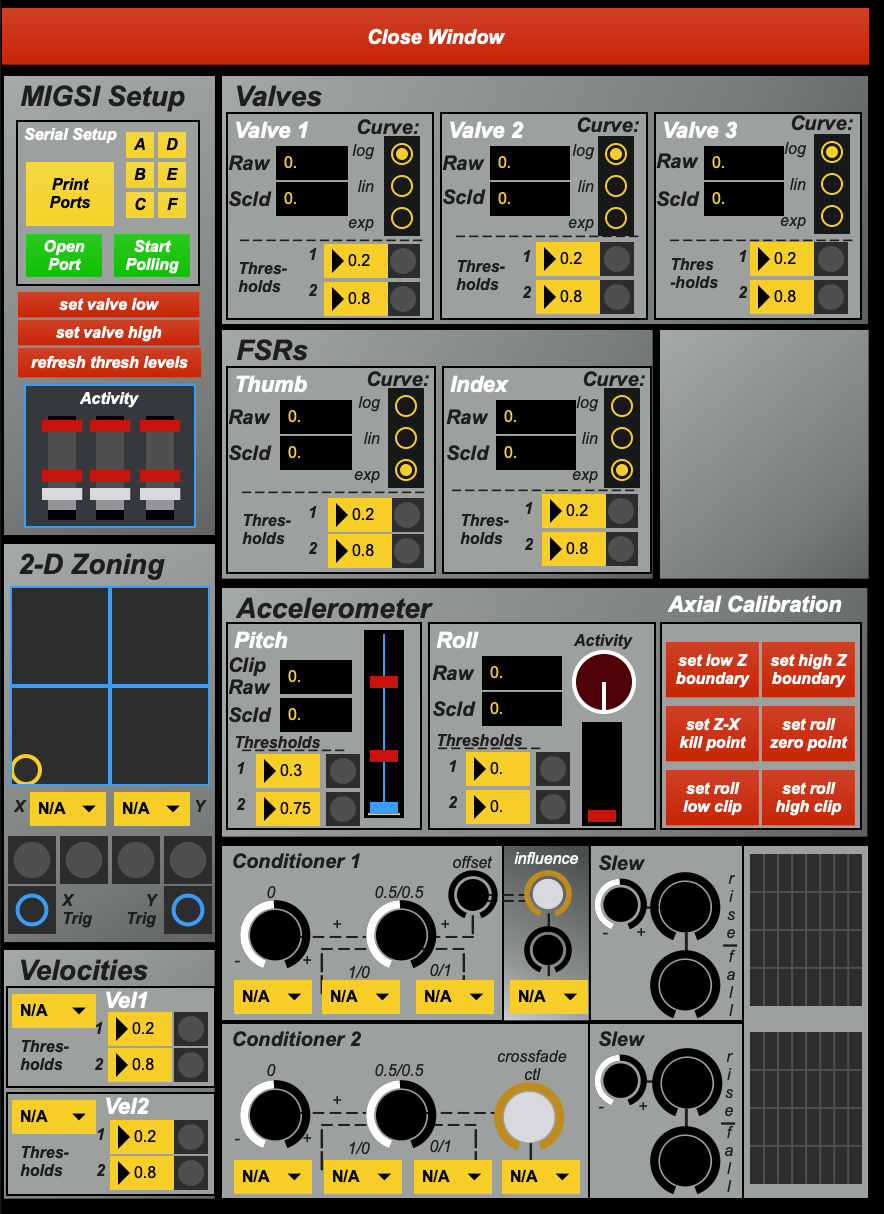

MIGSI Edit window from the MIGSI 2 Application

This window includes scaling/curve shape controls for each individual valve sensor and both FSRs. In addition to their continuous data output, these sensors are all afforded several pulse outputs—two comparator outputs, based on the scaled sensor value breaching individual user-definable Thresholds, and two momentary trigger/pulse outputs based on each of these thresholds (one that fires when the threshold in question is breached in a positive-going direction, and another when it is breached in a negative-going direction). Ultimately, each of these raw sensors produces nine data streams: the scaled raw data, the two comparators used as control data, the two comparators used as pulse data, and one “ascending” and “descending” pulse for each of the two comparators.
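
As a rough illustration of one threshold and its associated outputs, here is a minimal Python sketch. It is not the Max/MSP implementation: the class name, the 0.0 to 1.0 value range, and the absence of hysteresis are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ThresholdDetector:
    """One user-definable threshold on a scaled sensor value (assumed 0.0 to 1.0)."""
    threshold: float
    above: bool = False  # comparator state remembered from the previous sample

    def step(self, value: float) -> dict:
        """Process one sample and report the comparator plus both edge pulses."""
        now_above = value > self.threshold
        result = {
            "comparator": now_above,                     # high while above the threshold
            "ascending": now_above and not self.above,   # fires once on an upward crossing
            "descending": self.above and not now_above,  # fires once on a downward crossing
        }
        self.above = now_above
        return result


def run(detector: ThresholdDetector, samples: list) -> list:
    """Feed a list of samples through a detector and collect the outputs."""
    return [detector.step(s) for s in samples]


# A sensor that is pressed past the threshold and then released.
outputs = run(ThresholdDetector(threshold=0.5), [0.1, 0.6, 0.7, 0.3])
ascending = [o["ascending"] for o in outputs]
descending = [o["descending"] for o in outputs]
```

Two such detectors per sensor, with the comparator state exposed both as control data and as pulse data, would account for eight of the nine streams described above; the scaled raw value is the ninth.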

MIGSI’s accelerometer has similar comparator/threshold-breaching outputs for both pitch and roll. Unlike the valves and FSRs, the accelerometer data (which is much more tied to some basic realities of space, and as such the performer’s level of comfort/control while playing) can be clipped at multiple points along each axis, allowing for “dead zones” so that it only takes effect when the horn is positioned in a particular way. This way, unwanted effects do not occur when physically engaging with the horn in “extra-musical”/practical manners, e.g. picking it up, setting it down, emptying water from the water keys during performance, etc.

Two data Conditioners (shown below, inspired by the Buchla 257 Dual Voltage Processor) can be used to mix/balance and dynamically scale/crossfade between data from each sensor—for instance, making it possible to pass accelerometer data only when an FSR is depressed, or to pass a sum of all valve data only when the horn is in a particular position, etc. Each conditioner also features a slew limiter with variable rise and fall time—allowing us to create parameterizations based on the general level of activity/persistence of activity from any combination of sensors. Moreover, this detected persistence could then be used to affect the impact of other sensor data on different parts of the patch.
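
The two ideas in this paragraph, scaling one data stream by another and slew limiting with separate rise and fall times, can be sketched in a few lines of Python. This is an assumption-laden illustration rather than the Conditioner itself: the function names are hypothetical, and rise and fall are expressed as a maximum change per sample instead of a time in milliseconds.

```python
def scale_by(signal, control):
    """Scale one data stream by another, sample by sample (both assumed 0.0 to 1.0)."""
    return [s * c for s, c in zip(signal, control)]


def slew_limit(samples, rise_per_step, fall_per_step, start=0.0):
    """Limit how quickly the output may rise or fall between successive samples."""
    out = []
    current = start
    for target in samples:
        delta = target - current
        if delta > 0:
            current += min(delta, rise_per_step)   # rising: capped by the rise rate
        else:
            current -= min(-delta, fall_per_step)  # falling: capped by the fall rate
        out.append(current)
    return out


# Accelerometer data passes only while the FSR (second stream) is depressed.
gated = scale_by([0.5, 0.5], [0.0, 1.0])

# A burst of activity rises quickly and decays slowly.
activity = slew_limit([1.0, 1.0, 1.0, 0.0, 0.0], rise_per_step=0.5, fall_per_step=0.25)
```

With a fast rise and a slow fall, the slewed output stays high for as long as the sensor keeps being re-triggered, which is one way to read the "persistence of activity" described above.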

The final additions to the MIGSI Edit page were the 2-D Zoning section and Velocities. 2-D Zoning allowed any of the data from the raw sensors, conditioners, comparators, or velocities to be mapped to a 2-D grid’s x and y axes. It produced six pulse outputs: one pulse output for each quadrant, and individual triggers for when the midpoint of the X or Y axis was breached. Ultimately, this was another form of multi-dimensional comparator, and could be used to create multiple simultaneous Boolean logic functions based on two input signals.
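
One possible reading of the six pulse outputs is sketched below in Python. The details are assumptions: the class and output names are hypothetical, the grid is taken to be the unit square, and a quadrant pulse is assumed to fire when the point enters that quadrant.

```python
QUADRANTS = {
    (False, False): "lower_left",
    (True, False): "lower_right",
    (False, True): "upper_left",
    (True, True): "upper_right",
}


class Zoner2D:
    """Track an (x, y) point in the unit square and emit pulses on zone changes."""

    def __init__(self, mid_x=0.5, mid_y=0.5):
        self.mid_x = mid_x
        self.mid_y = mid_y
        self.prev = None  # (right, upper) of the previous sample; None before the first

    def step(self, x, y):
        """Return the set of pulse names that fire for this sample."""
        zone = (x >= self.mid_x, y >= self.mid_y)
        pulses = set()
        if self.prev is not None:
            if zone[0] != self.prev[0]:
                pulses.add("x_mid")          # X midpoint crossed
            if zone[1] != self.prev[1]:
                pulses.add("y_mid")          # Y midpoint crossed
            if zone != self.prev:
                pulses.add(QUADRANTS[zone])  # entered a new quadrant
        self.prev = zone
        return pulses


zoner = Zoner2D()
history = [zoner.step(x, y) for x, y in [(0.2, 0.2), (0.8, 0.2), (0.8, 0.9), (0.1, 0.1)]]
```

Each quadrant is effectively a logical AND of two comparator states, which is why the section can stand in for several Boolean functions at once.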

The Velocities section contained two identical processors, which could accept input from the raw sensors or data conditioners. At its core, each of these processors produces the first derivative of its input: a signal that corresponds to the instantaneous rate of change of its input. When used to process a Valve’s raw data, for instance, a Velocity processor would produce what its name implies: a signal corresponding to the velocity of valve movement. By using the second Velocity processor to process the first, you could access the second derivative of the input: acceleration. This section contrasts with the slew limiters in the data conditioners. The slew limiters act as lowpass filters, only passing data when it has been relatively slow or persistent; the Velocity processors, on the other hand, pass data only when it is particularly fast or transient. Like the valves and FSRs, the Velocity processors have many pulse outputs based on two comparators (and again, detection of whether these thresholds are breached in a positive-going or negative-going fashion).
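
For sampled data, the first derivative is usually approximated by the difference between successive samples. The sketch below assumes a backward difference and a hypothetical `velocity` function; the application's own smoothing and scaling are not documented here and are not reproduced.

```python
def velocity(samples, sample_rate_hz=1.0):
    """Approximate the first derivative of a sampled signal with a backward difference."""
    out = []
    previous = samples[0] if samples else 0.0
    for value in samples:
        out.append((value - previous) * sample_rate_hz)  # change per second
        previous = value
    return out


valve_position = [0.0, 1.0, 3.0, 6.0, 6.0]
valve_velocity = velocity(valve_position)          # first derivative
valve_acceleration = velocity(valve_velocity)      # second derivative: one processor feeding the other
```

Chaining the function with itself mirrors the patching described above, where the second Velocity processor takes the first as its input.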

Ultimately, data from the MIGSI Edit window is passed to the main window via the Bus Access menus, which allow access to ten simultaneous control data streams and ten simultaneous pulse data streams. In practice, this was always more than sufficient for creating interesting interactions between the trumpet and electronic sound generation/processing. (Worth noting: the yellow Bus Access menus also allow input from eight fixed incoming OSC messages, making it possible to send data to the Bus from other software or computers. From there, these Bus messages are treated identically to control signals from MIGSI or the rest of the software itself.)

So, that’s it for the MIGSI Edit window—but what of all the other menus? Well, the Monitor window is simple enough: it’s a realtime display of the valve and FSR data, just to give the performer peace of mind that MIGSI is, in fact, connected and sending data.

Control Editor + Control Processing

The Control Editor is a bit more complex. It contains an Envelope Follower, a Preset Data Source, four Envelopes, five Random data sources, and multiple Control Modifiers. In modular synthesizer terms, these are the MIGSI 2 Application’s control voltage sources.

The Preset data source has six control outputs, with eight total stages shared by all outputs. The user can edit the values for each output at each stage, and may use another control signal to scan/address the table of values. It offers fixed control of stage-to-stage interpolation time, which affects all outputs equally. This was a simple way of performing “macro”-style control from a single data/control source.
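
A table scanned by a control signal, with a shared glide time, might look something like the following Python sketch. The class name and the step-based glide are assumptions; the real module has eight stages and six outputs, whereas the example uses a two-by-two table to keep the numbers easy to follow.

```python
class PresetSource:
    """A table of stages, each holding one value per output, addressed by a control signal."""

    def __init__(self, table, glide_steps=1):
        self.table = table                      # list of stages; each stage is a list of output values
        self.glide_steps = max(1, glide_steps)  # interpolation time, in processing steps
        self.stage = 0
        self.start = list(table[0])
        self.current = list(table[0])
        self.progress = self.glide_steps        # no glide in progress at start-up

    def step(self, address):
        """address is a control value (assumed 0.0 to 1.0) that selects a stage."""
        stage = max(0, min(int(address * len(self.table)), len(self.table) - 1))
        if stage != self.stage:
            self.stage = stage
            self.start = list(self.current)     # glide from wherever the outputs are now
            self.progress = 0
        if self.progress < self.glide_steps:
            self.progress += 1
        t = self.progress / self.glide_steps
        target = self.table[self.stage]
        # Every output shares the same interpolation position t.
        self.current = [a + (b - a) * t for a, b in zip(self.start, target)]
        return self.current


source = PresetSource([[0.0, 1.0], [1.0, 0.0]], glide_steps=4)
first = source.step(0.0)
glide = [source.step(0.9) for _ in range(4)]
```

Because a single address value moves every output at once, one sensor can steer several parameters together, which is the "macro" behaviour the paragraph describes.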

The Envelope Follower is as its name implies: an envelope follower with continuously variable gain as well as rise and fall times. It could produce a continuous envelope output based on any combination of signals from TCO1, TCO2, the Granular Processor, or the TRIP. It contained an integrated comparator with variable threshold control.

The Envelopes section contains four envelopes: two Attack-Decay/Attack-Release envelopes (borrowed from Virtual 200), and two Attack-Decay envelopes with skew/timebase parameterization. Each envelope generator could be set to loop by triggering it from its own end-of-cycle pulse output. The Random section contained two fluctuating random sources (with dynamic rate control), two Sample and Holds, and an “N+1” random voltage generator inspired by the Buchla 266 Source of Uncertainty. All key parameters in both the Envelopes and Random sections could be dynamically controlled by other control data sources (as evidenced by the multitude of blue menus).

The Control Modifiers section is similar to the data conditioning in the MIGSI Edit window. The two primary Control Processors act as mixers/crossfaders; the first can additionally perform VCA-like functions/multiplication and slewing, and the second can perform dynamic crossfading between control signals. The second also implements a control delay: a delay line with feedback and dynamic control of delay time, used specifically to temporally decouple a modulation source from its audible impact.

The Control Modifiers section also featured two control Inverters. The final addition to this section was two “Window” processors: functions with user-definable max/min clipping values, which would then expand the “permitted” range to a full-range control signal. This became a useful tool for permitting, say, an envelope follower to only modulate delay time when a signal was between 60–75% of its maximum loudness, etc. By combining all of these processing functions together, it is possible to do all sorts of peculiar tricks: creating irregular transfer functions, temporal displacement, comparison of past and present values, allowing the past to impact the present only under specified conditions, etc.
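
The Window behaviour (clip to a range, then stretch that range back out to full scale) reduces to a short function. The sketch below is a plain Python restatement under the assumption that control signals run from 0.0 to 1.0; the function name is hypothetical.

```python
def window(value, low, high):
    """Clip value to [low, high], then stretch that range to the full 0.0 to 1.0 span."""
    if high <= low:
        raise ValueError("high must be greater than low")
    clipped = min(max(value, low), high)
    return (clipped - low) / (high - low)


below = window(0.1, 0.25, 0.75)   # under the window: pinned at the bottom
inside = window(0.5, 0.25, 0.75)  # halfway through the window
above = window(0.9, 0.25, 0.75)   # over the window: pinned at the top
```

For the loudness example in the text, `window(level, 0.60, 0.75)` would leave the modulation at zero until the follower reaches 60% and saturate it at 75%.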

Pulse Editor + Pulse Processing

The Pulse Editor/Pulse Processing window allows a similar modular approach to generating, commingling, and conditioning timing pulses. It contains two Pulse Counters, a Pulser, a Pulse Mixer, a Pulse Delay, two Comparators, an Only After processor, an Only While (AND) processor, and a Flip Flop.

The Pulse Counters are ten-stage counters which advance or reset when pulses are sent to their respective “pulse” and “reset” inputs. The counters may have any number of stages between two and ten. A line of buttons allows the user to determine whether a pulse is produced at each specific stage of the count, making it possible to create pulse sequences/patterns, or to set events into motion only after “X” number of iterations of an incoming event. Think of it as a way of, potentially, filtering moments.
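
A counter with a per-stage on/off button row can be sketched as follows. The class name is hypothetical, and one behavioural detail is an assumption: after a reset, the next incoming pulse is taken to land on the first stage.

```python
class PulseCounter:
    """A looping counter of 2 to 10 stages with one on/off button per stage."""

    def __init__(self, stages, active):
        if not 2 <= stages <= 10:
            raise ValueError("stages must be between 2 and 10")
        if len(active) != stages:
            raise ValueError("need exactly one button state per stage")
        self.stages = stages
        self.active = list(active)
        self.position = -1  # no stage reached yet

    def pulse(self):
        """Advance one stage; return True if this stage is set to emit a pulse."""
        self.position = (self.position + 1) % self.stages
        return self.active[self.position]

    def reset(self):
        """Return to the start so the next pulse lands on the first stage (assumed)."""
        self.position = -1


# Emit on the first and fourth of every four incoming pulses.
counter = PulseCounter(4, [True, False, False, True])
pattern = [counter.pulse() for _ in range(6)]
```

Setting only the last button of an N-stage counter gives the "only after X iterations" behaviour mentioned above.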

The Pulser is a simple metronomic pulse generator which can be used to trigger any pulse-activated function in the software. The Flip Flop is a simple pulse-activated toggle function. The Pulse Mixer allows you to send pulses from up to three pulse sources to a single pulse destination. The Only While processor is a logical AND between two pulse sources; the Only After processor allows the top pulse to pass only if a pulse has previously been received at the bottom input (the bottom input state is reset upon receipt of a pulse at the top input).
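
The logic of the Only After, Only While, and Flip Flop processors can be written out directly. This is a minimal Python sketch with hypothetical names, treating pulses as method calls or booleans rather than timed signals.

```python
class OnlyAfter:
    """Pass a top pulse only if a bottom pulse has arrived since the last top pulse."""

    def __init__(self):
        self.armed = False

    def bottom(self):
        """A pulse at the bottom input arms the gate."""
        self.armed = True

    def top(self):
        """A pulse at the top input passes if armed; either way, the armed state is cleared."""
        passed = self.armed
        self.armed = False
        return passed


class FlipFlop:
    """Toggle between two states on each incoming pulse."""

    def __init__(self):
        self.state = False

    def pulse(self):
        self.state = not self.state
        return self.state


def only_while(a, b):
    """Logical AND of two pulse states."""
    return a and b


gate = OnlyAfter()
blocked = gate.top()      # nothing received at the bottom input yet
gate.bottom()
passed = gate.top()       # armed by the bottom pulse
blocked_again = gate.top()

toggle = FlipFlop()
toggles = [toggle.pulse() for _ in range(3)]
```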

The Comparators allow for pulse generation through comparison of two control signals, which may originate from the MIGSI Bus, the Control Edit window, or from a few special places in the main window. Alternatively, you can use a dedicated knob for defining a fixed comparison threshold. These are useful for creating multiple distinct pulses from an envelope follower, for converting TCO control data into pulses, for creating irregular timing pulses from the Fluctuating Random generators, etc.

Lastly, the Pulse Delay acts much like the Control Delay, allowing two pulse signals to be combined and then delayed by a variable (and dynamically controllable) time interval. Feedback may be generated in the Pulse Delay by choosing its own output as one of the two scalable inputs. This is yet another way of temporally dislocating stimulus and response across the entire software.

Control Voltage + OSC Interfacing

The CV Editor and OSC Editor windows are quite simple. The CV Editor is intended for use with an Expert Sleepers ES-8 DC-coupled Eurorack-format audio interface; it allows you to send any combination of eight control signals or pulse signals from the MIGSI 2 Application to a modular synthesizer. The OSC Editor allows you to send up to eight control signals via OSC to up to four IP/Port destinations, making it simple to use MIGSI as part of a network or telematic performance.
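
For readers who want to receive or emulate this data elsewhere, the sketch below shows what a single-float OSC 1.0 message looks like on the wire and how one packet could be sent to several destinations over UDP. The address `/migsi/control/1` is invented for illustration; the addresses the application actually uses are not given here.

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII bytes, a null terminator, padding to a multiple of 4."""
    raw = text.encode("ascii")
    padding = 4 - (len(raw) % 4)  # always between 1 and 4 null bytes
    return raw + b"\x00" * padding


def osc_float_message(address: str, value: float) -> bytes:
    """Build an OSC 1.0 message carrying one 32-bit big-endian float."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)


def send_to_all(message: bytes, destinations) -> None:
    """Send the same packet to each (host, port) pair over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for host, port in destinations:
            sock.sendto(message, (host, port))


# Hypothetical address; nothing is sent until send_to_all is called.
packet = osc_float_message("/migsi/control/1", 0.5)
```

Calling `send_to_all(packet, [("127.0.0.1", 9000), ("127.0.0.1", 9001)])` would deliver the same control value to two local listeners; the ports are placeholders.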

Let’s diverge now, and get deeper into the details about MIGSI’s audio generation and processing. Then, we’ll return to discuss the Aux Editor and Quad Editor windows.

Audio Workflow

Designed primarily as a toolkit for accompanying trumpet with the MIGSI hardware interface, MIGSI’s audio workflow consists of an external audio input, multiple audio processors, and multiple sound generators, all optimized to respond to data from the MIGSI hardware, audio feature detection, and data generated from within the application. Generally speaking, sound generators and processors were designed such that significant tonal/timbral/textural changes could be induced with relatively simple input from external sources, maximizing the potential for control mappings. Often, “macro”-style control is built into each device in one way or another.

The MIGSI app’s primary audio input (usually from a trumpet) features a dedicated gain control, a dedicated envelope follower, and a dedicated variable-width bandpass filter and frequency shifter. The bandpass filter and frequency shifter can be automated through the use of any “blue-menu” control signals. A dedicated “solo” state can be used to mute all sound generators aside from the external input in order to test audio levels during setup.

One of the first audio processors added to the MIGSI 2 Application was the Granular Processor, a three-voice sampler/playback system. Nearly every parameter (speed, start position, size, stereo spread, and grain timing, aka “spread”) could be modulated, and the processor additionally featured per-grain randomization index controls. “Glisson index” introduced speed modulation via the same envelope used to shape the grain amplitude (an attack-decay envelope with variable skew, aka “shape”). The Granular Processor featured its own output bandpass filter, and could be activated/deactivated and globally randomized via red-menu timing pulse signals. Commonly, the envelope follower would be passed through a comparator, for instance, to randomize the granular state.

Importantly, the granular processor—and all later processors—features audio routing controls at its input. Acting like an effect send level, these controls establish a proportional input balance from the external input, both TCOs, and the TRIP. Moreover, it is possible to continuously fade between this group of sound sources and the Granular Processor’s own output as an input source, creating a sort of granular feedback when continually recording. Recording may be manually activated and paused as desired, and the recording buffer size may be set according to user specification.

The final versions of the MIGSI 2 Application feature a polyphonic implementation of TRIP, which includes an attack-decay envelope generator, per-voice parameter randomization, and a switch to generally prioritize either low-frequency or high-frequency sounds, resulting in more chaotic, unruly sounds or tonal/quasi-tonal results, respectively. Any incoming pulse and control source could be used to determine the envelope onset times and rough pitch range, and an internal bus provided modulation access to all parameters from any blue-menu control signal. Ultimately, I’m unsure that this implementation of TRIP was a great success, but we added it nonetheless. It was used in only a handful of performances and recordings.

More consistently used, though, were the MIGSI 2 Application’s dual Temporal Conduit Oscillators (TCOs). In fact, the creation of the TCO was one of the developments that originally started me down the path of building a general-purpose performance/composition software environment altogether—you can read more about this in the pages detailing the original TCO and the Dual TCO.

Each of MIGSI’s TCOs is slightly different from the versions found in the original TCO and Dual TCO patches. In addition to the “standard” TCO features, each features a variable-width bandpass filter and a lowpass gate on the output, making them somewhat better-suited to acting as articulated sound sources, and providing considerably stronger/more focused ability to rein in their harshness (listen above to an improvisation focused on the two TCOs and granular processor for a sense of how they might sound in context). Additionally, MIGSI’s TCOs offer an additional control bus for modulating the Conduit Shape Animation and Conduit Flux Processing processes, as well as dedicated busses for modulating the Animation Generator and Conduit Exciter’s frequencies, respectively.

The state randomization and reset (Panic) controls found in other versions of the TCO could be activated via red-menu timing pulse signals. Commonly, two logically opposed threshold detection signals from one of MIGSI’s FSRs would be used to address these features—for instance, randomizing the TCO’s state when the upper threshold was breached in the ascending direction, and resetting the TCO’s state when the lower threshold was breached in the descending direction…turning the FSR into a “momentary button” of sorts, in which the depressed state would be some random, chaotic TCO tone, and the un-depressed state would be silent.

TCO brought the MIGSI 2 Application its own concepts about dealing with time and chaos, and in many ways acted as a structural center to the app: defining the general workflow/sound used in most MIGSI-based compositions and performances.

One of the final additions to the MIGSI 2 Application was the Polyphonic Granular Facility—an alternate style of granular processor better-suited to working with externally loaded audio files, long buffer sizes, and considerably higher polyphony. In practice, the Granular Processor was typically used for more animated, gestural sounds and processing live input, whereas the Polyphonic Granular Facility was generally used for more continuous/textural sounds and granular sounds based on pre-recorded audio files. Generally speaking, the parameterization of the Polyphonic Granular Facility is quite similar to the Granular Processor—with the addition of an “arpeggiator” of sorts, per-grain randomization of grain shape, and a couple of other subtle details.

To my knowledge, no recordings were produced using the Polyphonic Granular Facility—though a standalone version of this granular processor was used frequently in 2018 and 2019 for various projects. It made appearances in many Burnt Dot performances; and an octaphonic version played a key role in Sarah Belle Reid’s Timepiece and The Sameness of Earlier and Later Times and Nows.

In most situations, audio in the MIGSI application exits the program via the final output mixer, which offers level, pan, and reverb controls for each signal source. One parameter of each sound source’s dedicated channel may be modulated (inspired by the Buchla 212 Dodecamodule).

Alongside the output mixer is a Buchla 277-inspired simple multi-tap delay (originally lifted directly from the Virtual 200 application). This delay includes a variable frequency shifter on each tap, variable feedback amount from each tap, and a single lowpass gate that manages the global feedback level. Additionally, it could be kicked into longer time ranges and set to “infinite” mode, in which it acted like a crude looper. This delay can be heard in many places on Julia Holter’s album Aviary, on which Sarah Belle Reid performed trumpet and electronics (courtesy of the MIGSI 2 Application).

Auxiliary Input Editor

The Auxiliary Input Edit window (Aux Edit) is used to manage signal levels, panning, and effect amounts for external inputs assigned via ADC channels 2, 3, and 4. This enables external instruments to be mixed within the application, and to be processed via the Granular Processor, Delay, or Polyphonic Granular Facility.

This menu was one of the final additions to the MIGSI 2 Application—it was actually finalized in order to simplify the setup for a live stream performance I gave as part of the PowWow! concert series, performing on the MIGSI App and the CalArts Black Serge system. It continued to be used in Sarah’s own explorations with hardware synthesizers in conjunction with the app.

Quadraphonic Mixer / Output Editor

One of the final additions to the MIGSI 2 Application was a quadraphonic output mixer. It featured four quadraphonic panning channels with assignable inputs—which could come from any audio source in the program (including the outputs of the stereo mixer on the main page). Each channel offered two modulation inputs for gain, angle and separation.

The individual channels all used a combination of polar and Cartesian panning schemes. Panning modulation is always polar: it uses angle of rotation and divergence/separation from “center” as its primary location controls. However, each of the four outputs of this polar panning process itself had a dedicated Cartesian panner, visualized as an X/Y display. So, each channel could be contorted within quadraphonic space, with the “polar” panning scheme used to determine the position of the incoming sound along a 4-point trajectory within the quadraphonic space.
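
To make the polar controls concrete, the sketch below converts an angle and a separation into four speaker gains by first turning them into an X/Y position and then weighting the four corners bilinearly. This is one simple scheme chosen for illustration, not the application's panning law: the function name, the convention that 0 degrees points right, and the use of linear (amplitude-sum) gains rather than equal-power gains are all assumptions, and the per-output Cartesian panners described above are left out.

```python
import math


def quad_gains(angle_degrees: float, separation: float) -> dict:
    """Convert polar pan controls into four corner gains that always sum to 1.0.

    separation runs from 0.0 (centre of the room) to 1.0 (edge of the unit circle).
    """
    x = separation * math.cos(math.radians(angle_degrees))
    y = separation * math.sin(math.radians(angle_degrees))
    u = (x + 1.0) / 2.0  # 0.0 = fully left, 1.0 = fully right
    v = (y + 1.0) / 2.0  # 0.0 = fully rear, 1.0 = fully front
    return {
        "front_left": (1.0 - u) * v,
        "front_right": u * v,
        "rear_left": (1.0 - u) * (1.0 - v),
        "rear_right": u * (1.0 - v),
    }


centre = quad_gains(0.0, 0.0)      # no separation: equal level in every speaker
hard_right = quad_gains(0.0, 1.0)  # full separation at 0 degrees: right-hand pair only
```

Modulating only the angle sweeps the sound around the room, while modulating separation pulls it toward or away from the centre, which matches the two polar controls named in the text.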

The polyphonic granular synthesis engine had its own special panning scheme in the quadraphonic mixing page, with the ability to randomize the Cartesian panning of four discrete outputs. Additionally, the granular panning included a bounds/bounce generator inspired by Peter Blasser, in which the four outputs from the granular engine could continuously “bounce” around the space within established boundaries. Their position relative to these boundaries could be randomized in a variety of ways.

Ultimately, the quadraphonic capabilities in the MIGSI 2 Application were explored very little, though many of the underlying concepts (especially arbitrary and algorithmic “trajectory generation”) have been explored in other contexts—most recently in the map14 Spatial Dislocation Processor.